Introduction

Summarize a text using the Bart Large CNN pre-trained model:

curl "https://api.nlpcloud.io/v1/bart-large-cnn/summarization" \

-H "Authorization: Token 4eC39HqLyjWDarjtT1zdp7dc" \

-H "Content-Type: application/json" \

-X POST \

-d '{"text":"One month after the United States began what has become a troubled rollout of a national COVID vaccination campaign, the effort is finally gathering real steam. Close to a million doses -- over 951,000, to be more exact -- made their way into the arms of Americans in the past 24 hours, the U.S. Centers for Disease Control and Prevention reported Wednesday. That s the largest number of shots given in one day since the rollout began and a big jump from the previous day, when just under 340,000 doses were given, CBS News reported. That number is likely to jump quickly after the federal government on Tuesday gave states the OK to vaccinate anyone over 65 and said it would release all the doses of vaccine it has available for distribution. Meanwhile, a number of states have now opened mass vaccination sites in an effort to get larger numbers of people inoculated, CBS News reported."}'

import nlpcloud

client = nlpcloud.Client("bart-large-cnn", "4eC39HqLyjWDarjtT1zdp7dc")

# Returns a json object.

client.summarization("""One month after the United States began what has become a

troubled rollout of a national COVID vaccination campaign, the effort is finally

gathering real steam. Close to a million doses -- over 951,000, to be more exact --

made their way into the arms of Americans in the past 24 hours, the U.S. Centers

for Disease Control and Prevention reported Wednesday. That s the largest number

of shots given in one day since the rollout began and a big jump from the

previous day, when just under 340,000 doses were given, CBS News reported.

That number is likely to jump quickly after the federal government on Tuesday

gave states the OK to vaccinate anyone over 65 and said it would release all

the doses of vaccine it has available for distribution. Meanwhile, a number

of states have now opened mass vaccination sites in an effort to get larger

numbers of people inoculated, CBS News reported.""")

require 'nlpcloud'

client = NLPCloud::Client.new('bart-large-cnn','4eC39HqLyjWDarjtT1zdp7dc')

# Returns a json object.

client.summarization("One month after the United States began what has become a

troubled rollout of a national COVID vaccination campaign, the effort is finally

gathering real steam. Close to a million doses -- over 951,000, to be more exact --

made their way into the arms of Americans in the past 24 hours, the U.S. Centers

for Disease Control and Prevention reported Wednesday. That s the largest number

of shots given in one day since the rollout began and a big jump from the

previous day, when just under 340,000 doses were given, CBS News reported.

That number is likely to jump quickly after the federal government on Tuesday

gave states the OK to vaccinate anyone over 65 and said it would release all

the doses of vaccine it has available for distribution. Meanwhile, a number

of states have now opened mass vaccination sites in an effort to get larger

numbers of people inoculated, CBS News reported.")

package main

import (

"net/http"

"github.com/nlpcloud/nlpcloud-go"

)

func main() {

client := nlpcloud.NewClient(&http.Client{}, nlpcloud.ClientParams{

Model:"bart-large-cnn", Token:"4eC39HqLyjWDarjtT1zdp7dc", GPU:false, Lang:"", Async:false})

summary, err := client.Summarization(nlpcloud.SummarizationParams{Text: "One month after the United States began what has become a

troubled rollout of a national COVID vaccination campaign, the effort is finally

gathering real steam. Close to a million doses -- over 951,000, to be more exact --

made their way into the arms of Americans in the past 24 hours, the U.S. Centers

for Disease Control and Prevention reported Wednesday. That s the largest number

of shots given in one day since the rollout began and a big jump from the

previous day, when just under 340,000 doses were given, CBS News reported.

That number is likely to jump quickly after the federal government on Tuesday

gave states the OK to vaccinate anyone over 65 and said it would release all

the doses of vaccine it has available for distribution. Meanwhile, a number

of states have now opened mass vaccination sites in an effort to get larger

numbers of people inoculated, CBS News reported."})

...

}

const NLPCloudClient = require('nlpcloud');

const client = new NLPCloudClient({model:'bart-large-cnn', token:'4eC39HqLyjWDarjtT1zdp7dc'})

// Returns an Axios promise with the results.

// In case of success, results are contained in `response.data`.

// In case of failure, you can retrieve the status code in `err.response.status`

// and the error message in `err.response.data.detail`.

client.summarization({text:`One month after the United States began what has become a

troubled rollout of a national COVID vaccination campaign, the effort is finally

gathering real steam. Close to a million doses -- over 951,000, to be more exact --

made their way into the arms of Americans in the past 24 hours, the U.S. Centers

for Disease Control and Prevention reported Wednesday. That s the largest number

of shots given in one day since the rollout began and a big jump from the

previous day, when just under 340,000 doses were given, CBS News reported.

That number is likely to jump quickly after the federal government on Tuesday

gave states the OK to vaccinate anyone over 65 and said it would release all

the doses of vaccine it has available for distribution. Meanwhile, a number

of states have now opened mass vaccination sites in an effort to get larger

numbers of people inoculated, CBS News reported.`})

.then(function (response) {

console.log(response.data);

})

.catch(function (err) {

console.error(err.response.status);

console.error(err.response.data.detail);

});

require 'vendor/autoload.php';

use NLPCloud\NLPCloud;

$client = new \NLPCloud\NLPCloud('bart-large-cnn','4eC39HqLyjWDarjtT1zdp7dc');

# Returns a json object.

echo json_encode($client->summarization('One month after the United States began what has become a

troubled rollout of a national COVID vaccination campaign, the effort is finally

gathering real steam. Close to a million doses -- over 951,000, to be more exact --

made their way into the arms of Americans in the past 24 hours, the U.S. Centers

for Disease Control and Prevention reported Wednesday. That s the largest number

of shots given in one day since the rollout began and a big jump from the

previous day, when just under 340,000 doses were given, CBS News reported.

That number is likely to jump quickly after the federal government on Tuesday

gave states the OK to vaccinate anyone over 65 and said it would release all

the doses of vaccine it has available for distribution. Meanwhile, a number

of states have now opened mass vaccination sites in an effort to get larger

numbers of people inoculated, CBS News reported.'));

Summarize a text using the Bart Large CNN pre-trained model on GPU:

curl "https://api.nlpcloud.io/v1/gpu/bart-large-cnn/summarization" \

-H "Authorization: Token 4eC39HqLyjWDarjtT1zdp7dc" \

-H "Content-Type: application/json" \

-X POST \

-d '{"text":"One month after the United States began what has become a troubled rollout of a national COVID vaccination campaign, the effort is finally gathering real steam. Close to a million doses -- over 951,000, to be more exact -- made their way into the arms of Americans in the past 24 hours, the U.S. Centers for Disease Control and Prevention reported Wednesday. That s the largest number of shots given in one day since the rollout began and a big jump from the previous day, when just under 340,000 doses were given, CBS News reported. That number is likely to jump quickly after the federal government on Tuesday gave states the OK to vaccinate anyone over 65 and said it would release all the doses of vaccine it has available for distribution. Meanwhile, a number of states have now opened mass vaccination sites in an effort to get larger numbers of people inoculated, CBS News reported."}'

import nlpcloud

client = nlpcloud.Client("bart-large-cnn", "4eC39HqLyjWDarjtT1zdp7dc", gpu=True)

# Returns a json object.

client.summarization("""One month after the United States began what has become a

troubled rollout of a national COVID vaccination campaign, the effort is finally

gathering real steam. Close to a million doses -- over 951,000, to be more exact --

made their way into the arms of Americans in the past 24 hours, the U.S. Centers

for Disease Control and Prevention reported Wednesday. That s the largest number

of shots given in one day since the rollout began and a big jump from the

previous day, when just under 340,000 doses were given, CBS News reported.

That number is likely to jump quickly after the federal government on Tuesday

gave states the OK to vaccinate anyone over 65 and said it would release all

the doses of vaccine it has available for distribution. Meanwhile, a number

of states have now opened mass vaccination sites in an effort to get larger

numbers of people inoculated, CBS News reported.""")

require 'nlpcloud'

client = NLPCloud::Client.new('bart-large-cnn','4eC39HqLyjWDarjtT1zdp7dc', gpu: true)

# Returns a json object.

client.summarization("One month after the United States began what has become a

troubled rollout of a national COVID vaccination campaign, the effort is finally

gathering real steam. Close to a million doses -- over 951,000, to be more exact --

made their way into the arms of Americans in the past 24 hours, the U.S. Centers

for Disease Control and Prevention reported Wednesday. That s the largest number

of shots given in one day since the rollout began and a big jump from the

previous day, when just under 340,000 doses were given, CBS News reported.

That number is likely to jump quickly after the federal government on Tuesday

gave states the OK to vaccinate anyone over 65 and said it would release all

the doses of vaccine it has available for distribution. Meanwhile, a number

of states have now opened mass vaccination sites in an effort to get larger

numbers of people inoculated, CBS News reported.")

package main

import (

"net/http"

"github.com/nlpcloud/nlpcloud-go"

)

func main() {

client := nlpcloud.NewClient(&http.Client{}, nlpcloud.ClientParams{

Model:"bart-large-cnn", Token:"4eC39HqLyjWDarjtT1zdp7dc", GPU:true, Lang:"", Async:false})

summary, err := client.Summarization(nlpcloud.SummarizationParams{Text: "One month after the United States began what has become a

troubled rollout of a national COVID vaccination campaign, the effort is finally

gathering real steam. Close to a million doses -- over 951,000, to be more exact --

made their way into the arms of Americans in the past 24 hours, the U.S. Centers

for Disease Control and Prevention reported Wednesday. That s the largest number

of shots given in one day since the rollout began and a big jump from the

previous day, when just under 340,000 doses were given, CBS News reported.

That number is likely to jump quickly after the federal government on Tuesday

gave states the OK to vaccinate anyone over 65 and said it would release all

the doses of vaccine it has available for distribution. Meanwhile, a number

of states have now opened mass vaccination sites in an effort to get larger

numbers of people inoculated, CBS News reported."})

...

}

const NLPCloudClient = require('nlpcloud');

const client = new NLPCloudClient({model:'bart-large-cnn', token:'4eC39HqLyjWDarjtT1zdp7dc', gpu:true})

// Returns an Axios promise with the results.

// In case of success, results are contained in `response.data`.

// In case of failure, you can retrieve the status code in `err.response.status`

// and the error message in `err.response.data.detail`.

client.summarization({text:`One month after the United States began what has become a

troubled rollout of a national COVID vaccination campaign, the effort is finally

gathering real steam. Close to a million doses -- over 951,000, to be more exact --

made their way into the arms of Americans in the past 24 hours, the U.S. Centers

for Disease Control and Prevention reported Wednesday. That s the largest number

of shots given in one day since the rollout began and a big jump from the

previous day, when just under 340,000 doses were given, CBS News reported.

That number is likely to jump quickly after the federal government on Tuesday

gave states the OK to vaccinate anyone over 65 and said it would release all

the doses of vaccine it has available for distribution. Meanwhile, a number

of states have now opened mass vaccination sites in an effort to get larger

numbers of people inoculated, CBS News reported.`})

.then(function (response) {

console.log(response.data);

})

.catch(function (err) {

console.error(err.response.status);

console.error(err.response.data.detail);

});

require 'vendor/autoload.php';

use NLPCloud\NLPCloud;

$client = new \NLPCloud\NLPCloud('bart-large-cnn','4eC39HqLyjWDarjtT1zdp7dc', True);

# Returns a json object.

echo json_encode($client->summarization('One month after the United States began what has become a

troubled rollout of a national COVID vaccination campaign, the effort is finally

gathering real steam. Close to a million doses -- over 951,000, to be more exact --

made their way into the arms of Americans in the past 24 hours, the U.S. Centers

for Disease Control and Prevention reported Wednesday. That s the largest number

of shots given in one day since the rollout began and a big jump from the

previous day, when just under 340,000 doses were given, CBS News reported.

That number is likely to jump quickly after the federal government on Tuesday

gave states the OK to vaccinate anyone over 65 and said it would release all

the doses of vaccine it has available for distribution. Meanwhile, a number

of states have now opened mass vaccination sites in an effort to get larger

numbers of people inoculated, CBS News reported.'));

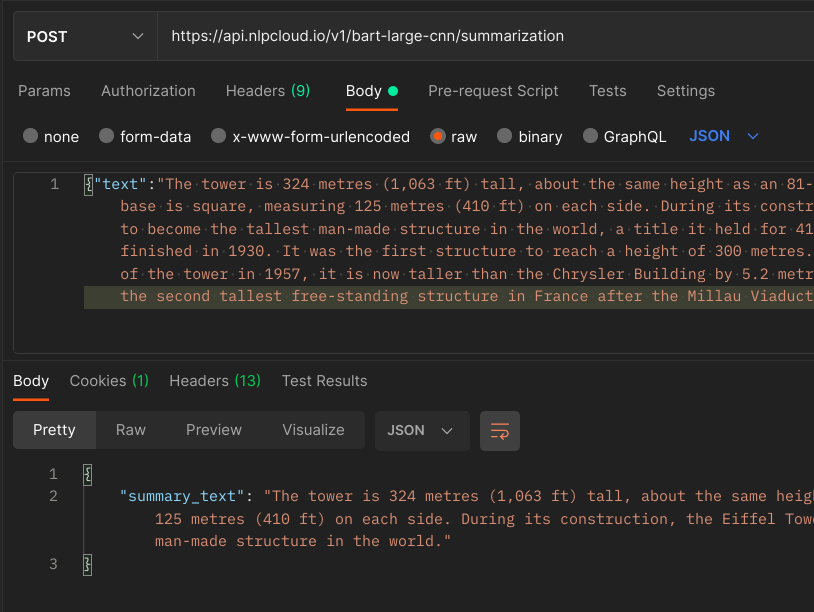

Output:

{

"summary_text": "Over 951,000 doses were given in the past 24 hours.

That's the largest number of shots given in one day since the rollout began.

That number is likely to jump quickly after the federal government gave

states the OK to vaccinate anyone over 65. A number of states have now

opened mass vaccination sites."

}

Welcome to the NLP Cloud API documentation.

Here is the list of use cases you can perform on the NLP Cloud API:

| Use Case | Model Used |

|---|---|

| Automatic Speech Recognition/Speech to Text: extract text from an audio or video file (see endpoint) | We use OpenAI's Whisper Large for speech to text in 97 languages. |

| Chatbot/Conversational AI: create an advanced chatbot (see endpoint) | We use in-house NLP Cloud models called ChatDolphin, and Fine-tuned LLaMA 3 70B. We also use Yi 34B by 01 AI, Dolphin Yi 34B by Eric Hartford, Mixtral 8x7B by Mistral AI, and Dolphin Mixtral 8x7B by Eric Hartford. This endpoint can leverage our multilingual add-on. |

| Classification: send a text, and let the model categorize the text for you in many languages (as an option you can give the candidate categories you want to assess) (see endpoint) | We use the Bart Large MNLI Yahoo Answers by Joe Davison, XLM Roberta Large XNLI by Joe Davison, an in-house NLP Cloud model called Fine-tuned LLaMA 3 70B, Yi 34B by 01 AI, and Mixtral 8x7B by Mistral AI. This endpoint can leverage our multilingual add-on. |

| Code Generation: generate source code out of a simple instruction, in any programming language (see endpoint) | We use in-house NLP Cloud models called ChatDolphin and Fine-tuned LLaMA 3 70B. We also use Dolphin Yi 34B by Eric Hartford and Dolphin Mixtral 8x7B by Eric Hartford. |

| Embeddings: calculate embeddings from a list of texts, in many languages (see endpoint) | We use Paraphrase Multilingual MPNet Base V2. |

| Headline Generation: send a text, and get a one sentence headline summarizing everything, in many languages (see endpoint) | We use T5 Base EN Generate Headline by Michal Pleban. This endpoint can leverage our multilingual add-on. |

| Grammar And Spelling Correction: remove the grammar and spelling errors from your text (see endpoint) | We use in-house NLP Cloud models called ChatDolphin, and Fine-tuned LLaMA 3 70B. We also use Dolphin Yi 34B by Eric Hartford, and Dolphin Mixtral 8x7B by Eric Hartford. This endpoint can leverage our multilingual add-on. |

| Image Generation: generate an image out of a simple text instruction (see endpoint) | We use Stable Diffusion. This endpoint can leverage our multilingual add-on |

| Intent Classification: detect the intent hidden behind a text (see endpoint) | We use in-house NLP Cloud models called ChatDolphin, and Fine-tuned LLaMA 3 70B. We also use Dolphin Yi 34B by Eric Hartford and Dolphin Mixtral 8x7B by Eric Hartford. This endpoint can leverage our multilingual add-on. |

| Keywords and Keyphrases Extraction: extract the main ideas in a text (see endpoint) | We use an in-house NLP Cloud model called Fine-tuned LLaMA 3 70B. This endpoint can leverage our multilingual add-on. |

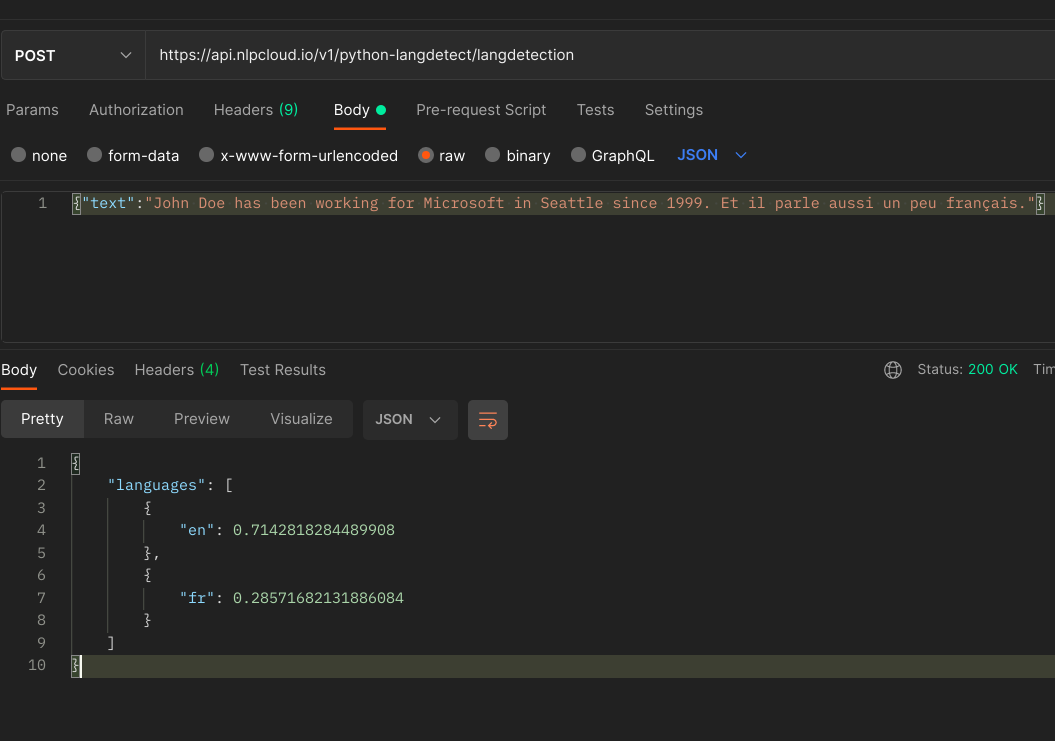

| Language Detection: detect one or several languages from a text (see endpoint) | We use Python's Langdetect library. |

| Lemmatization: extract lemmas from a text, in many languages (see endpoint) | All the large spaCy models are available (15 languages) and Megagon Lab's Ginza for Japanese |

| Named Entity Recognition (NER): extract and tag relevant entities from a text, like name, company, country... in many languages (see endpoint) | All the large spaCy models are available (15 languages), and Megagon Lab's Ginza for Japanese, and generative models with PyTorch and Jax. |

| Noun Chunks Extraction: extract noun chunks from a text, in many languages (see endpoint) | All the large spaCy models are available (15 languages) and Megagon Lab's Ginza for Japanese |

| Paraphrasing and rewriting: send a text, and get a rephrased version that has the same meaning but with new words (see endpoint) | We use an in-house NLP Cloud model called Fine-tuned LLaMA 3 70B. This endpoint can leverage our multilingual add-on. |

| Part-Of-Speech (POS) tagging: assign parts of speech to each word of your text, in many languages (see endpoint) | All the large spaCy models are available (15 languages) and Megagon Lab's Ginza for Japanese |

| Question answering: ask questions about anything (as an option you can give a context and ask specific questions about this context) in many languages (see endpoint) | We use an in-house NLP Cloud models called ChatDolphin, and Fine-tuned LLaMA 3 70B. We also use Dolphin Yi 34B by Eric Hartford, and Dolphin Mixtral 8x7B by Eric Hartford. This endpoint can leverage our multilingual add-on. |

| Semantic Search: search your own data, in many languages (see endpoint) | Create your own semantic search model. |

| Semantic Similarity: detect whether 2 pieces of text have the same meaning or not, in many languages (see endpoint) | We use Paraphrase Multilingual MPNet Base V2. |

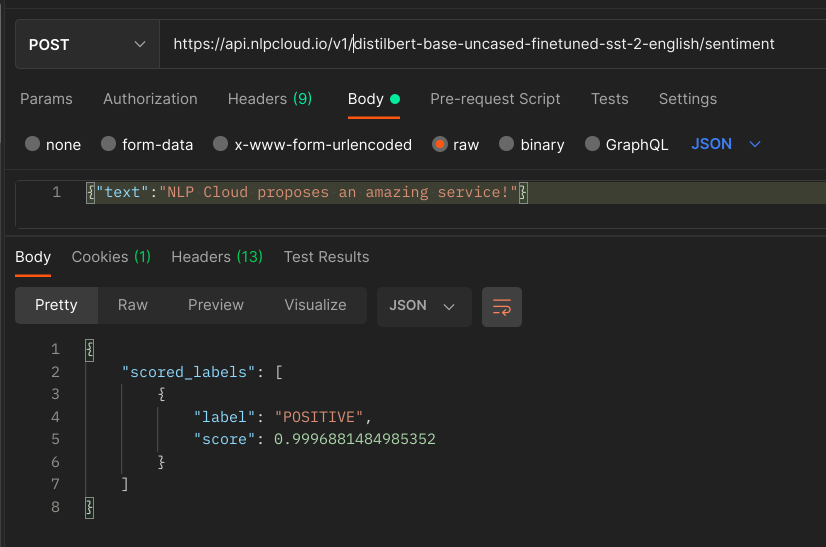

| Sentiment analysis: determine whether a text is rather positive or negative or detect emotions, in many languages (see endpoint) | We use DistilBERT Base Uncased Finetuned SST-2 and DistilBERT Base Uncased Emotion. This endpoint can leverage our multilingual add-on. |

| Speech Synthesis/Text To Speech: generate audio out of text (see endpoint) | We use Speech T5 by Microsoft to synthesize voice in English |

| Summarization: send a text, and get a smaller text keeping essential information only, in many languages (see endpoint) | We use Bart Large CNN by Meta, in-house NLP Cloud models called ChatDolphin and Fine-tuned LLaMA 3 70B, Dolphin Yi 34B by Eric Hartford, and Dolphin Mixtral 8x7B by Eric Hartford. This endpoint can leverage our multilingual add-on. |

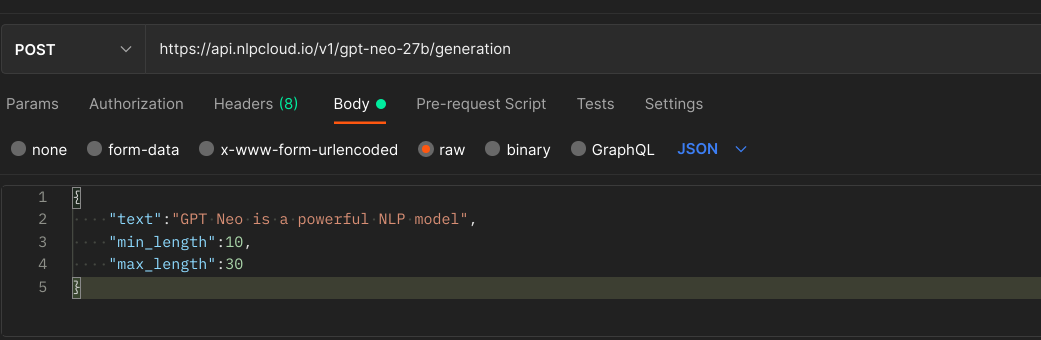

| Text generation: achieve any AI use case using generative models, in many languages (see endpoint) | We use in-house NLP Cloud models called ChatDolphin, and Fine-tuned LLaMA 3 70B. We also use Yi 34B by 01 AI, Dolphin Yi 34B by Eric Hartford, Mixtral 8x7B by Mistral AI, and Dolphin Mixtral 8x7B by Eric Hartford. This endpoint can leverage our multilingual add-on. |

| Tokenization: extract tokens from a text, in many languages (see endpoint) | All the large spaCy models are available (15 languages) and Megagon Lab's Ginza for Japanese |

| Translation: translate text from one language to another (see endpoint) | We use NLLB 200 3.3B by Meta for translation in 200 languages |

If not done yet, please retrieve an API key from your dashboard and don't hesitate to test our models on the playground. Also do not hesitate to contact us if needed: [email protected].

If you need to process non-English languages, we encourage you to either use our multilingual add-on or use a model that natively supports your language.

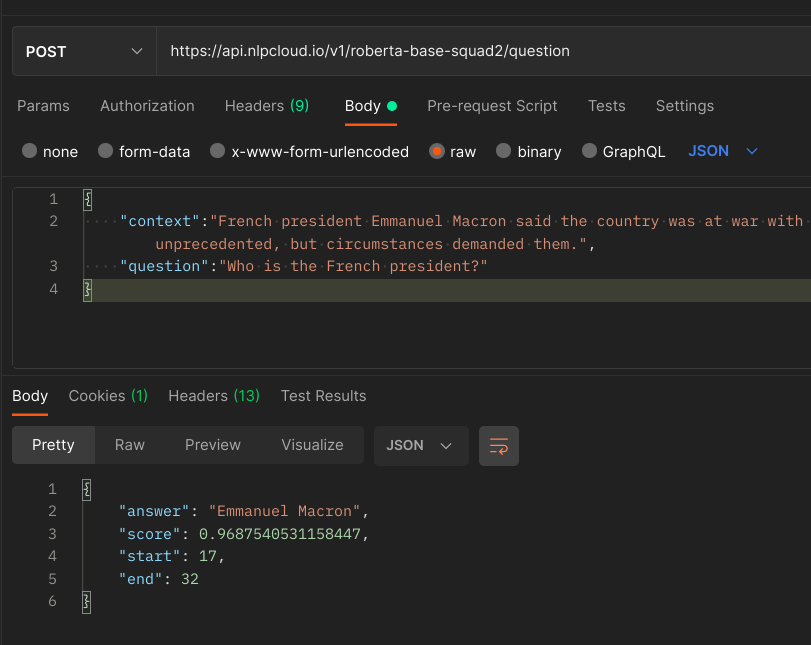

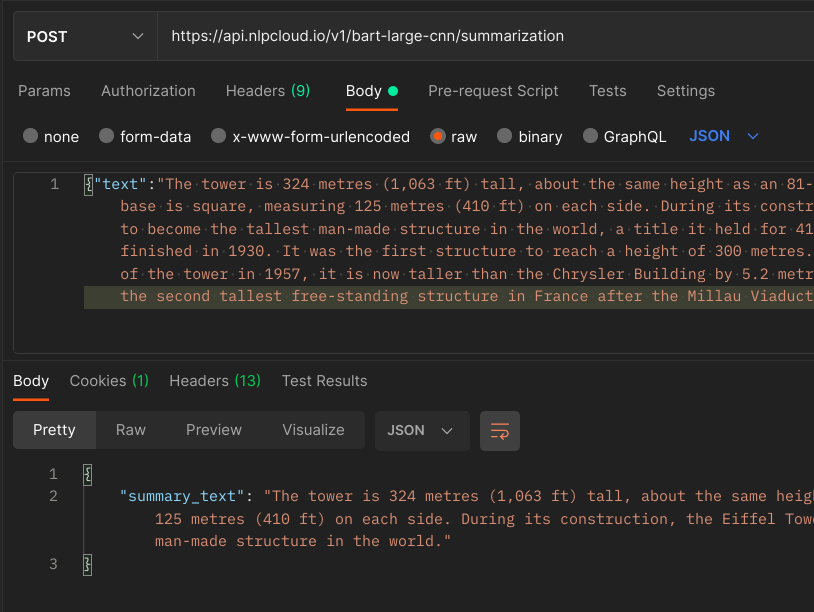

See on the right a full example about summarizing block of text, using Facebook's Bart Large CNN pre-trained model, both on CPU and GPU. And the same example below using Postman:

Not a programmer? See this tutorial about using NLP Cloud with the Bubble.io no-code platform.

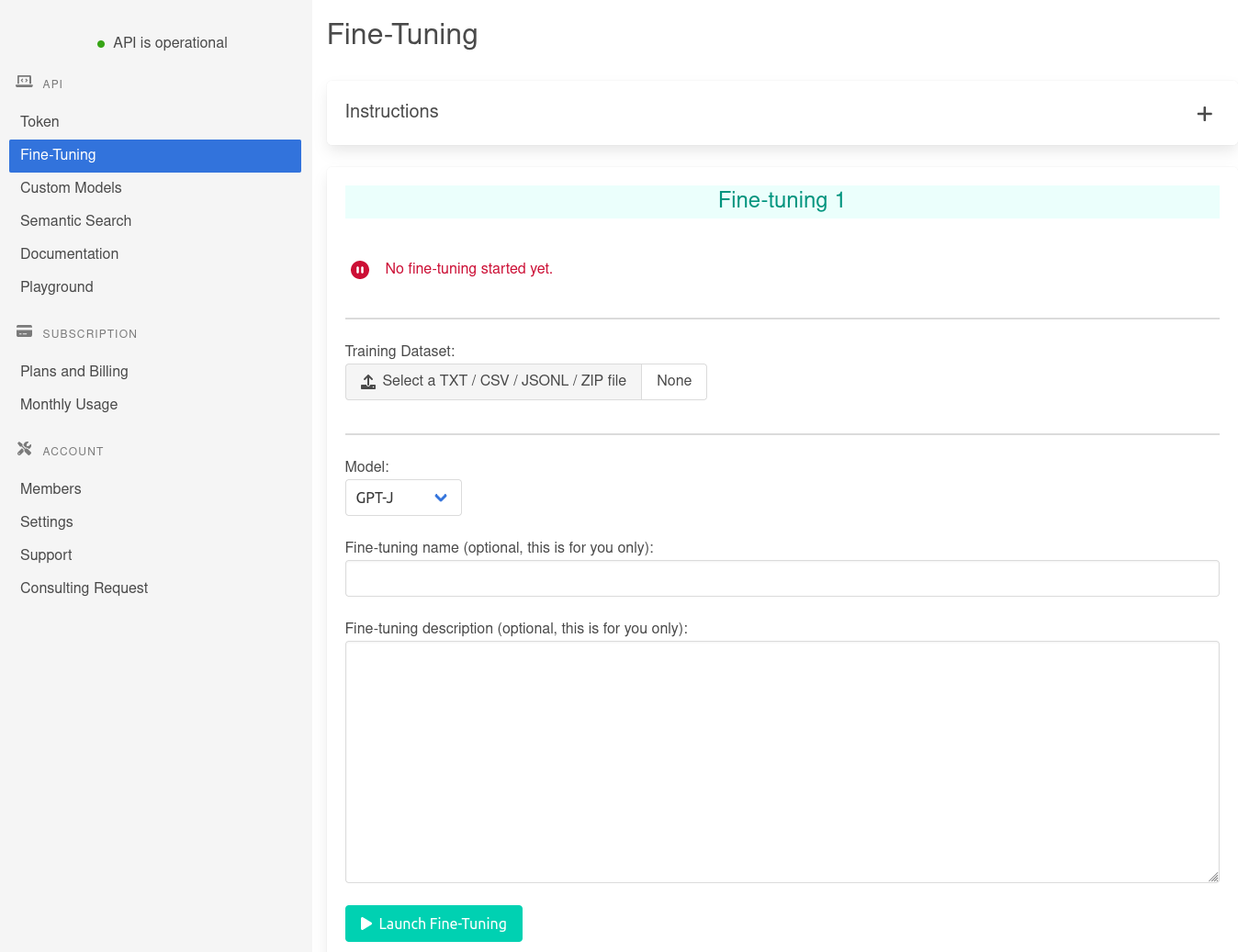

You can fine-tune your own models. You can also upload your own custom models in your dashboard.

In addition to this documentation, you can also read this introduction article and watch this introduction video.

We welcome every feedbacks about the API, the documentation, or the client libraries. Please let us know!

Set Up

Client Installation

If you are using one of our client libraries, here is how to install them.

Python

Install with pip.

pip install nlpcloud

More details on the source repo: https://github.com/nlpcloud/nlpcloud-python

Ruby

Install with gem.

gem install nlpcloud

More details on the source repo: https://github.com/nlpcloud/nlpcloud-ruby

Go

Install with go install.

go install github.com/nlpcloud/nlpcloud-go

More details on the source repo: https://github.com/nlpcloud/nlpcloud-go

Node.js

Install with NPM.

npm install nlpcloud --save

More details on the source repo: https://github.com/nlpcloud/nlpcloud-js

PHP

Install with Composer.

Create a composer.json file containing at least the following:

{"require": {"nlpcloud/nlpcloud-client": "*"}}

Then launch the following:

composer install

More details on the source repo: https://github.com/nlpcloud/nlpcloud-php

Authentication

Replace

with your token:

curl "https://api.nlpcloud.io/v1/<model>/<endpoint>" \

-H "Authorization: Token <token>"

import nlpcloud

client = nlpcloud.Client("<model>", "<token>")

require 'nlpcloud'

client = NLPCloud::Client.new('<model>','<token>')

package main

import (

"net/http"

"github.com/nlpcloud/nlpcloud-go"

)

func main() {

client := nlpcloud.NewClient(&http.Client, "<model>", "<token>", false, "")

...

}

const NLPCloudClient = require('nlpcloud');

const client = new NLPCloudClient({model:'<model>', token:'<token>'})

use NLPCloud\NLPCloud;

$client = new \NLPCloud\NLPCloud('<model>','<token>');

Add your API key after the Token keyword in an Authorization header. You should include this header in all your requests: Authorization: Token <token>. Alternatively you can also use Bearer instead of Token: Authorization: Bearer <token>.

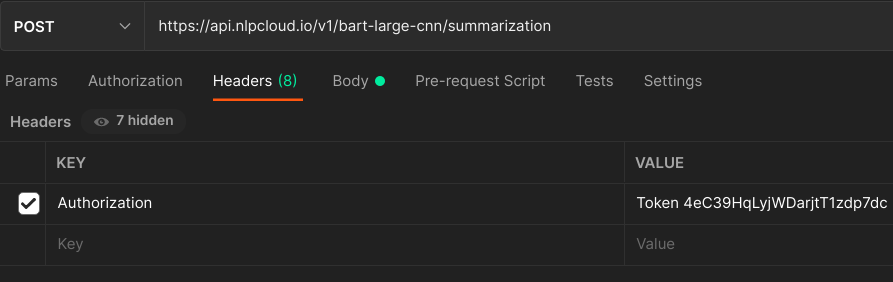

Here is an example using Postman (Postman is automatically adding headers to the requests. You should at least keep the Host header, otherwise you will get a 400 error.):

If not done yet, please get an API key in your dashboard.

All API requests must be made over HTTPS. Calls made over plain HTTP will fail. API requests without authentication will also fail.

Versioning

Replace

with the right API version:

curl "https://api.nlpcloud.io/<version>/<model>/<endpoint>"

# The latest API version is automatically set by the library.

# The latest API version is automatically set by the library.

// The latest API version is automatically set by the library.

// The latest API version is automatically set by the library.

// The latest API version is automatically set by the library.

The latest API version is v1.

The API version comes right after the domain name, and before the model name.

Encoding

POST JSON data:

curl "https://api.nlpcloud.io/v1/<model>/<endpoint>" \

-H "Content-Type: application/json" \

-X POST \

-d '{"text":"John Doe has been working for Microsoft in Seattle since 1999."}'

# Encoding is automatically handled by the library.

# Encoding is automatically handled by the library.

// Encoding is automatically handled by the library.

// Encoding is automatically handled by the library.

// Encoding is automatically handled by the library.

You should send JSON encoded data in POST requests.

Don't forget to set the content-type accordingly: "Content-Type: application/json".

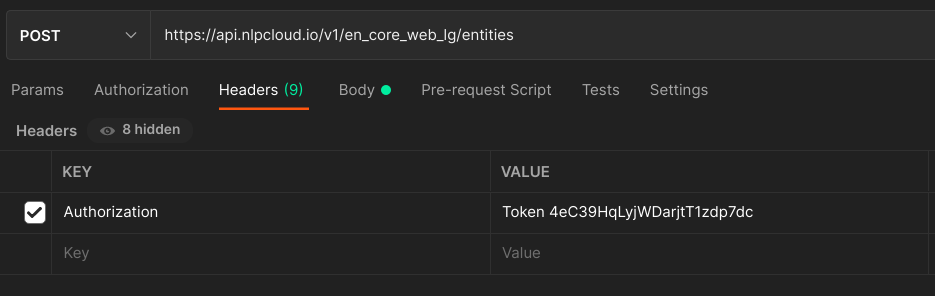

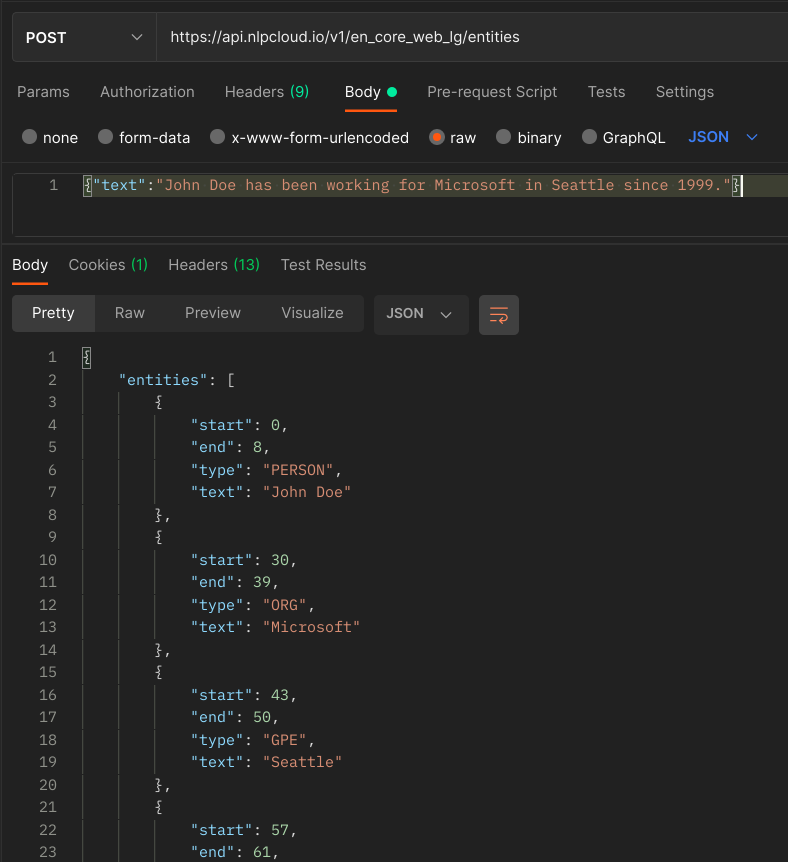

Here is an example using Postman:

Put your JSON data in Body > raw. Note that if your text contains double quotes (") you will need to escape them (using \") in order for your JSON to be properly decoded. This is not needed when using a client library.

Models

Replace

with the right pre-trained model:

curl "https://api.nlpcloud.io/v1/<model>/<endpoint>"

# Set the model during client initialization.

client = nlpcloud.Client("<model>", "<token>")

client = NLPCloud::Client.new('<model>','<token>')

client := nlpcloud.NewClient(&http.Client, "<model>", "<token>", false, "")

const client = new NLPCloudClient({model:'<model>',token:'<token>'})

$client = new \NLPCloud\NLPCloud('<model>','<token>');

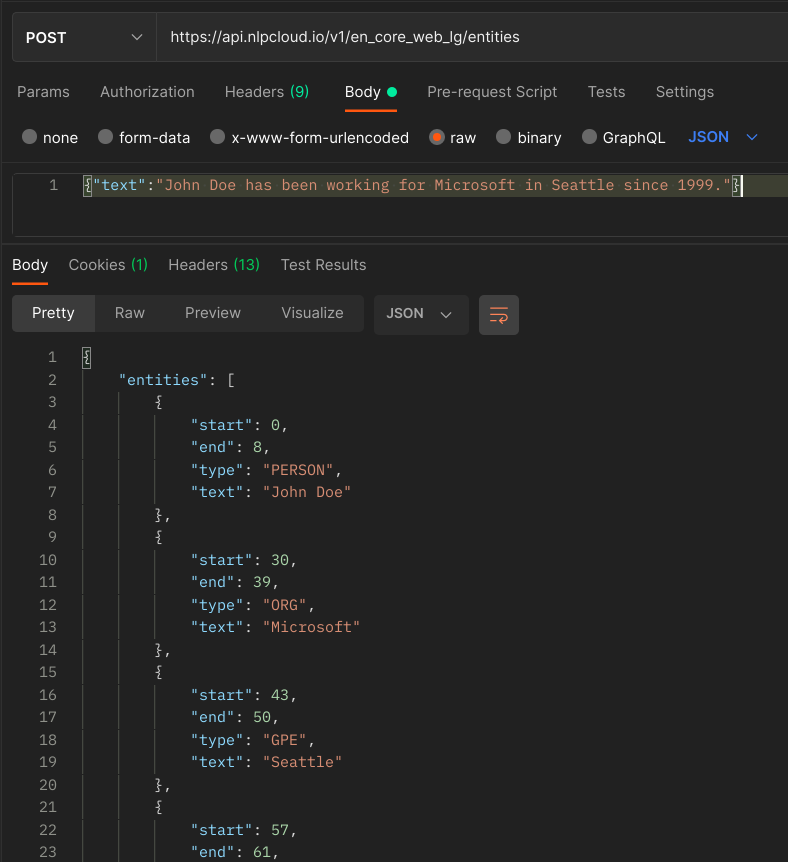

Example: pre-trained spaCy's en_core_web_lg model for Named Entity Recognition (NER):

curl "https://api.nlpcloud.io/v1/en_core_web_lg/entities"

client = nlpcloud.Client("en_core_web_lg", "<token>")

client = NLPCloud::Client.new('en_core_web_lg','<token>')

client := nlpcloud.NewClient(&http.Client, "en_core_web_lg", "<token>", false, "")

const client = new NLPCloudClient({model:'en_core_web_lg',token:'<token>'})

$client = new \NLPCloud\NLPCloud('en_core_web_lg','<your token>');

Example: your private spaCy model with ID 7894 for Named Entity Recognition (NER):

curl "https://api.nlpcloud.io/v1/custom-model/7894/entities"

client = nlpcloud.Client("custom-model/7894", "<token>")

client = NLPCloud::Client.new('custom-model/7894','<token>')

client := nlpcloud.NewClient(&http.Client, "custom-model/7894", "<token>", false, "")

const client = new NLPCloudClient({model:'custom-model/7894',token:'<token>'})

$client = new \NLPCloud\NLPCloud('custom-model/7894','<your token>');

You can use many pre-trained models (see below) for all kinds of AI use cases like sentiment analysis, classification, summarization, text generation, and much more.

You can also use your own private models, in 2 different ways:

- Train/fine-tune and deploy a private model (it happens here in your dashboard)

- Upload and deploy a private model (it happens here in your dashboard).

In case of a private model, your private API endpoint appears in your dashboard once the fine-tuning is finished, or the model upload is finished.

Here is an example on the right performing Named Entity Recognition (NER) with spaCy's pre-trained en_core_web_lg model, and another example doing the same thing with your own private spaCy model with ID 7894.

Models List

Here is a comprehensive list of all the pre-trained models supported by the NLP Cloud API:

| Name | Description | Libraries |

|---|---|---|

| en_core_web_lg: spaCy's English Large | See on spaCy | spaCy v3 |

| fr_core_news_lg: spaCy's French Large | See on spaCy | spaCy v3 |

| zh_core_web_lg: spaCy's Chinese Large | See on spaCy | spaCy v3 |

| da_core_news_lg: spaCy's Danish Large | See on spaCy | spaCy v3 |

| nl_core_news_lg: spaCy's Dutch Large | See on spaCy | spaCy v3 |

| de_core_news_lg: spaCy's German Large | See on spaCy | spaCy v3 |

| el_core_news_lg: spaCy's Greek Large | See on spaCy | spaCy v3 |

| it_core_news_lg: spaCy's Italian Large | See on spaCy | spaCy v3 |

| ja_ginza_electra: Megagon Lab's Ginza | See on Github | spaCy v3 |

| ja_core_news_lg: spaCy's Japanese Large | See on spaCy | spaCy v3 |

| lt_core_news_lg: spaCy's Lithuanian Large | See on spaCy | spaCy v3 |

| nb_core_news_lg: spaCy's Norwegian okmål Large | See on spaCy | spaCy v3 |

| pl_core_news_lg: spaCy's Polish Large | See on spaCy | spaCy v3 |

| pt_core_news_lg: spaCy's Portuguese Large | See on spaCy | spaCy v3 |

| ro_core_news_lg: spaCy's Romanian Large | See on spaCy | spaCy v3 |

| es_core_news_lg: spaCy's Spanish Large | See on spaCy | spaCy v3 |

| bart-large-mnli-yahoo-answers: Joe Davison's Bart Large MNLI Yahoo Answers | See on Hugging Face | PyTorch / Transformers |

| xlm-roberta-large-xnli: Joe Davison's XLM Roberta Large XNLI | See on Hugging Face | PyTorch / Transformers |

| bart-large-cnn: Meta's Bart Large CNN | See on Hugging Face | PyTorch / Transformers |

| t5-base-en-generate-headline: Michal Pleban's T5 Base EN Generate Headline | See on Hugging Face | PyTorch / Transformers |

| distilbert-base-uncased-finetuned-sst-2-english: Distilbert Finetuned SST 2 | See on Hugging Face | PyTorch / Transformers |

| distilbert-base-uncased-emotion: Distilbert Emotion | See on Hugging Face | PyTorch / Transformers |

| finetuned-llama-3-70b: Fine-tuned LLaMA 3 70B | A fine-tuned version of LLaMA 3 70B | PyTorch |

| chatdolphin: ChatDolphin | An in-house NLP Cloud base generative model | PyTorch |

| dolphin-yi-34b: Eric Hartford's Dolphin Yi 34B | See on Hugging Face | PyTorch |

| dolphin-mixtral-8x7b: Eric Hartford's Dolphin Mixtral 8x7B | See on Hugging Face | PyTorch |

| nllb-200-3-3b: Facebook's NLLB 200 3.3B | See on Hugging Face | PyTorch / Transformers |

| paraphrase-multilingual-mpnet-base-v2: Paraphrase Multilingual MPNet Base V2 | See on Hugging Face | PyTorch / Sentence Transformers |

| python-langdetect: Python LangDetect library | See on Pypi | LangDetect |

| speech-t5: Microsoft's Speech T5 | See on Microsoft's repo | Pytorch / Transformers |

| stable-diffusion: Stability AI's Stable Diffusion XL model | See on Hugging Face | PyTorch / Diffusers |

| whisper: OpenAI's Whisper Large | See on OpenAI's repo | PyTorch |

Train/Fine-Tune Your Own Model

See the dedicated fine-tuning section for more details.

Upload Your Own Model Based On HF Transformers

Save your model to disk

model.save_pretrained('saved_model')

You can use your own models based on the Hugging Face Transformers framework.

If your model is publicly available on Hugging Face, you can simply send us the link to the Hugging Face repository.

Alternatively, here are the steps you should follow:

Save your model to disk in a saved_model directory using the .save_pretrained method: model.save_pretrained('saved_model').

Then compress the newly created saved_model directory using Zip.

Finally, upload your Zip file in your dashboard.

If your model comes with a custom script, you can send this script to [email protected], together with any relevant instruction necessary to make your model run. If your model must support custom input or output formats, please let us know so we can adapt the API signature. If we have questions we will let you know.

If you experience difficulties, please contact us so we can assist.

Upload Your spaCy Model

Export in Python script:

nlp.to_disk("/path")

Package:

python -m spacy package /path/to/exported/model /path/to/packaged/model

Archive as .tar.gz:

# Go to /path/to/packaged/model

python setup.py sdist

Or archive as .whl:

# Go to /path/to/packaged/model

python setup.py bdist_wheel

You can use your own spaCy models.

Upload your custom spaCy model in your dashboard, but first you need to export it and package it as a Python module.

Here is what you should do:

- Export your model to disk using the spaCy

to_disk("/path")command. - Package your exported model using the

spacy packagecommand. - Archive your packaged model either as a

.tar.gzarchive usingpython setup.py sdistor as a Python wheel usingpython setup.py bdist_wheel(both formats are accepted). - Retrieve you archive in the newly created

distfolder and upload it in your dashboard.

If your model comes with a custom script, you can send this script to [email protected], together with any relevant instruction necessary to make your model run. If your model must support custom input or output formats, please let us know so we can adapt the API signature. If we have questions we will let you know.

If you experience difficulties, please contact us so we can assist.

Upload Models Based On Other Frameworks

You might deploy NLP models not based on spaCy or Hugging Face Transformers.

Please contact us so we can advise.

GPU

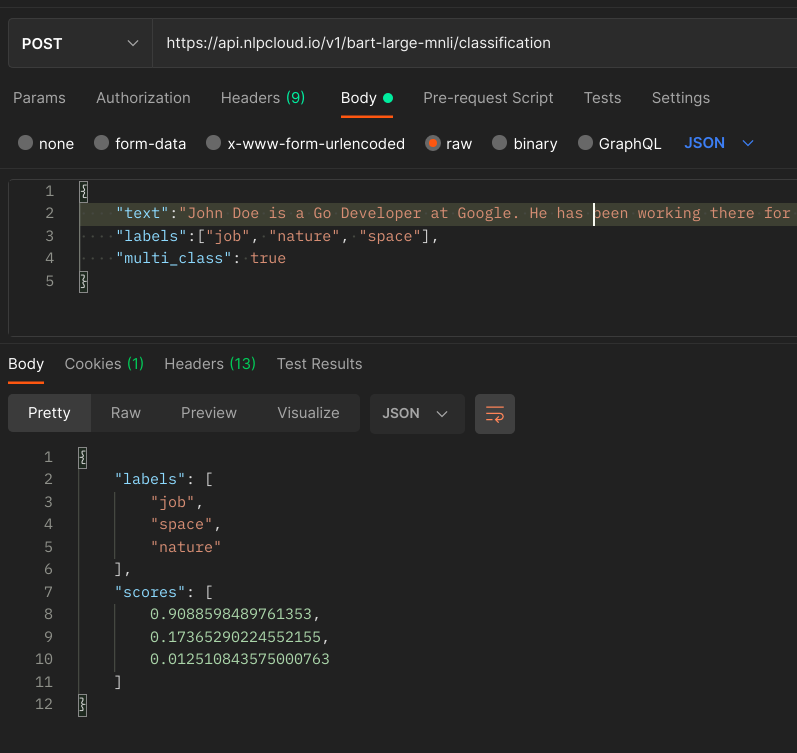

Text classification with Bart Large MNLI Yahoo Answers on GPU

curl "https://api.nlpcloud.io/v1/gpu/bart-large-mnli-yahoo-answers/classification" \

-H "Authorization: Token <token>" \

-H "Content-Type: application/json" \

-X POST \

-d '{

"text":"John Doe is a Go Developer at Google. He has been working there for 10 years and has been awarded employee of the year",

"labels":["job", "nature", "space"],

"multi_class": true

}'

import nlpcloud

client = nlpcloud.Client("bart-large-mnli-yahoo-answers", "<token>", gpu=True)

# Returns a json object.

client.classification("""John Doe is a Go Developer at Google.

He has been working there for 10 years and has been

awarded employee of the year.""",

labels=["job", "nature", "space"],

multi_class=True)

require 'nlpcloud'

client = NLPCloud::Client.new('bart-large-mnli-yahoo-answers','<token>', gpu: true)

# Returns a json object.

client.classification("John Doe is a Go Developer at Google.

He has been working there for 10 years and has been

awarded employee of the year.",

labels: ["job", "nature", "space"],

multi_class: true)

import (

"net/http"

"github.com/nlpcloud/nlpcloud-go"

)

func newTrue() *bool {

b := true

return &b

}

func main() {

client := nlpcloud.NewClient(&http.Client{}, nlpcloud.ClientParams{Model:"bart-large-mnli-yahoo-answers", Token:"<token>",

GPU:true, Lang:"", Async:false})

// Returns a Classification struct.

classes, err := client.Classification(nlpcloud.ClassificationParams{

Text: `John Doe is a Go Developer at Google. He has been working there for

10 years and has been awarded employee of the year.`,

Labels: []string{"job", "nature", "space"},

MultiClass: newTrue(),

})

...

}

const NLPCloudClient = require('nlpcloud');

const client = new NLPCloudClient({model:'bart-large-mnli-yahoo-answers', token:'<token>', gpu:true})

// Returns an Axios promise with the results.

// In case of success, results are contained in `response.data`.

// In case of failure, you can retrieve the status code in `err.response.status`

// and the error message in `err.response.data.detail`.

client.classification({text:`John Doe is a Go Developer at Google.

He has been working there for 10 years and has been

awarded employee of the year.`,

labels:['job', 'nature', 'space'],

multiClass:true}).then(function (response) {

console.log(response.data);

})

.catch(function (err) {

console.error(err.response.status);

console.error(err.response.data.detail);

});

require 'vendor/autoload.php';

use NLPCloud\NLPCloud;

$client = new \NLPCloud\NLPCloud('bart-large-mnli-yahoo-answers','<token>', True);

# Returns a json object.

echo json_encode($client->classification("John Doe is a Go Developer at Google.

He has been working there for 10 years and has been

awarded employee of the year.",

array("job", "nature", "space"),

True));

We recommend that you subscribe to a GPU plan for better performance, especially for real-time applications or for computation-intensive generative models.

In order to use a GPU, simply add gpu in the endpoint URL, after the API version, and before the name of the model.

For example if you want to use the Bart Large MNLI Yahoo Answers classification model on a GPU, you should use the following endpoint:

https://api.nlpcloud.io/v1/gpu/bart-large-mnli-yahoo-answers/classification

See a full example on the right.

Multilingual Add-On

Example: performing French summarization with Bart Large CNN:

curl "https://api.nlpcloud.io/v1/fra_Latn/bart-large-cnn/summarization" \

-H "Authorization: Token <token>" \

-H "Content-Type: application/json" \

-X POST -d '{

"text":"Sur des images aériennes, prises la veille par un vol de surveillance

de la Nouvelle-Zélande, la côte d’une île est bordée d’arbres passés du vert

au gris sous l’effet des retombées volcaniques. On y voit aussi des immeubles

endommagés côtoyer des bâtiments intacts. « D’après le peu d’informations

dont nous disposons, l’échelle de la dévastation pourrait être immense,

spécialement pour les îles les plus isolées », avait déclaré plus tôt

Katie Greenwood, de la Fédération internationale des sociétés de la Croix-Rouge.

Selon l’Organisation mondiale de la santé (OMS), une centaine de maisons ont

été endommagées, dont cinquante ont été détruites sur l’île principale de

Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une Britannique âgée de 50 ans,

Angela Glover, emportée par le tsunami après avoir essayé de sauver les chiens

de son refuge, selon sa famille."

}'

import nlpcloud

client = nlpcloud.Client("bart-large-cnn", "<token>", lang="fra_Latn")

# Returns a json object.

client.summarization("""Sur des images aériennes, prises la veille par un vol de surveillance

de la Nouvelle-Zélande, la côte d’une île est bordée d’arbres passés du vert

au gris sous l’effet des retombées volcaniques. On y voit aussi des immeubles

endommagés côtoyer des bâtiments intacts. « D’après le peu d’informations

dont nous disposons, l’échelle de la dévastation pourrait être immense,

spécialement pour les îles les plus isolées », avait déclaré plus tôt

Katie Greenwood, de la Fédération internationale des sociétés de la Croix-Rouge.

Selon l’Organisation mondiale de la santé (OMS), une centaine de maisons ont

été endommagées, dont cinquante ont été détruites sur l’île principale de

Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une Britannique âgée de 50 ans,

Angela Glover, emportée par le tsunami après avoir essayé de sauver les chiens

de son refuge, selon sa famille.""")

require 'nlpcloud'

client = NLPCloud::Client.new('bart-large-cnn','<token>', lang: 'fr')

# Returns a json object.

client.summarization("Sur des images aériennes, prises la veille par un vol de surveillance

de la Nouvelle-Zélande, la côte d’une île est bordée d’arbres passés du vert

au gris sous l’effet des retombées volcaniques. On y voit aussi des immeubles

endommagés côtoyer des bâtiments intacts. « D’après le peu d’informations

dont nous disposons, l’échelle de la dévastation pourrait être immense,

spécialement pour les îles les plus isolées », avait déclaré plus tôt

Katie Greenwood, de la Fédération internationale des sociétés de la Croix-Rouge.

Selon l’Organisation mondiale de la santé (OMS), une centaine de maisons ont

été endommagées, dont cinquante ont été détruites sur l’île principale de

Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une Britannique âgée de 50 ans,

Angela Glover, emportée par le tsunami après avoir essayé de sauver les chiens

de son refuge, selon sa famille.")

import (

"net/http"

"github.com/nlpcloud/nlpcloud-go"

)

func main() {

client := nlpcloud.NewClient(&http.Client{}, nlpcloud.ClientParams{Model:"bart-large-cnn", Token:"<token>",

GPU:false, Lang:"fra_Latn", Async:false})

// Returns a Summarization struct.

summary, err := client.Summarization(nlpcloud.SummarizationParams{

Text: `Sur des images aériennes, prises la veille par un vol de surveillance

de la Nouvelle-Zélande, la côte d’une île est bordée d’arbres passés du vert

au gris sous l’effet des retombées volcaniques. On y voit aussi des immeubles

endommagés côtoyer des bâtiments intacts. « D’après le peu d’informations

dont nous disposons, l’échelle de la dévastation pourrait être immense,

spécialement pour les îles les plus isolées », avait déclaré plus tôt

Katie Greenwood, de la Fédération internationale des sociétés de la Croix-Rouge.

Selon l’Organisation mondiale de la santé (OMS), une centaine de maisons ont

été endommagées, dont cinquante ont été détruites sur l’île principale de

Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une Britannique âgée de 50 ans,

Angela Glover, emportée par le tsunami après avoir essayé de sauver les chiens

de son refuge, selon sa famille.`,

})

...

}

const NLPCloudClient = require('nlpcloud');

const client = new NLPCloudClient({model:'bart-large-cnn','<token>', lang:'fr'})

// Returns an Axios promise with the results.

// In case of success, results are contained in `response.data`.

// In case of failure, you can retrieve the status code in `err.response.status`

// and the error message in `err.response.data.detail`.

client.summarization({text:`Sur des images aériennes, prises la veille par un vol de surveillance

de la Nouvelle-Zélande, la côte d’une île est bordée d’arbres passés du vert

au gris sous l’effet des retombées volcaniques. On y voit aussi des immeubles

endommagés côtoyer des bâtiments intacts. « D’après le peu d’informations

dont nous disposons, l’échelle de la dévastation pourrait être immense,

spécialement pour les îles les plus isolées », avait déclaré plus tôt

Katie Greenwood, de la Fédération internationale des sociétés de la Croix-Rouge.

Selon l’Organisation mondiale de la santé (OMS), une centaine de maisons ont

été endommagées, dont cinquante ont été détruites sur l’île principale de

Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une Britannique âgée de 50 ans,

Angela Glover, emportée par le tsunami après avoir essayé de sauver les chiens

de son refuge, selon sa famille.`}).then(function (response) {

console.log(response.data);

})

.catch(function (err) {

console.error(err.response.status);

console.error(err.response.data.detail);

});

require 'vendor/autoload.php';

use NLPCloud\NLPCloud;

$client = new \NLPCloud\NLPCloud('bart-large-cnn','<token>', False, 'fr');

# Returns a json object.

echo json_encode($client->summarization("Sur des images aériennes, prises la veille par un vol de surveillance

de la Nouvelle-Zélande, la côte d’une île est bordée d’arbres passés du vert

au gris sous l’effet des retombées volcaniques. On y voit aussi des immeubles

endommagés côtoyer des bâtiments intacts. « D’après le peu d’informations

dont nous disposons, l’échelle de la dévastation pourrait être immense,

spécialement pour les îles les plus isolées », avait déclaré plus tôt

Katie Greenwood, de la Fédération internationale des sociétés de la Croix-Rouge.

Selon l’Organisation mondiale de la santé (OMS), une centaine de maisons ont

été endommagées, dont cinquante ont été détruites sur l’île principale de

Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une Britannique âgée de 50 ans,

Angela Glover, emportée par le tsunami après avoir essayé de sauver les chiens

de son refuge, selon sa famille."));

Output:

{"summary_text": "Selon l'organisation mondiale de la santé, une centaine

de maisons ont été endommagées, dont 50 détruites sur l'île principale

de Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une femme britannique de 50 ans,

Angela Glover. Glover a été emportée par le tsunami après avoir tenté

de sauver des chiens de son refuge."}

Example: performing French summarization with Bart Large CNN on GPU:

curl "https://api.nlpcloud.io/v1/gpu/fra_Latn/bart-large-cnn/summarization" \

-H "Authorization: Token <token>" \

-H "Content-Type: application/json" \

-X POST -d '{

"text":"Sur des images aériennes, prises la veille par un vol de surveillance

de la Nouvelle-Zélande, la côte d’une île est bordée d’arbres passés du vert

au gris sous l’effet des retombées volcaniques. On y voit aussi des immeubles

endommagés côtoyer des bâtiments intacts. « D’après le peu d’informations

dont nous disposons, l’échelle de la dévastation pourrait être immense,

spécialement pour les îles les plus isolées », avait déclaré plus tôt

Katie Greenwood, de la Fédération internationale des sociétés de la Croix-Rouge.

Selon l’Organisation mondiale de la santé (OMS), une centaine de maisons ont

été endommagées, dont cinquante ont été détruites sur l’île principale de

Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une Britannique âgée de 50 ans,

Angela Glover, emportée par le tsunami après avoir essayé de sauver les chiens

de son refuge, selon sa famille."

}'

import nlpcloud

client = nlpcloud.Client("<model_name>", "<token>", gpu=True, lang="fra_Latn")

# Returns a json object.

client.summarization("""Sur des images aériennes, prises la veille par un vol de surveillance

de la Nouvelle-Zélande, la côte d’une île est bordée d’arbres passés du vert

au gris sous l’effet des retombées volcaniques. On y voit aussi des immeubles

endommagés côtoyer des bâtiments intacts. « D’après le peu d’informations

dont nous disposons, l’échelle de la dévastation pourrait être immense,

spécialement pour les îles les plus isolées », avait déclaré plus tôt

Katie Greenwood, de la Fédération internationale des sociétés de la Croix-Rouge.

Selon l’Organisation mondiale de la santé (OMS), une centaine de maisons ont

été endommagées, dont cinquante ont été détruites sur l’île principale de

Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une Britannique âgée de 50 ans,

Angela Glover, emportée par le tsunami après avoir essayé de sauver les chiens

de son refuge, selon sa famille.""")

require 'nlpcloud'

client = NLPCloud::Client.new('<model_name>','<token>', gpu: true, lang: 'fr')

# Returns a json object.

client.summarization("Sur des images aériennes, prises la veille par un vol de surveillance

de la Nouvelle-Zélande, la côte d’une île est bordée d’arbres passés du vert

au gris sous l’effet des retombées volcaniques. On y voit aussi des immeubles

endommagés côtoyer des bâtiments intacts. « D’après le peu d’informations

dont nous disposons, l’échelle de la dévastation pourrait être immense,

spécialement pour les îles les plus isolées », avait déclaré plus tôt

Katie Greenwood, de la Fédération internationale des sociétés de la Croix-Rouge.

Selon l’Organisation mondiale de la santé (OMS), une centaine de maisons ont

été endommagées, dont cinquante ont été détruites sur l’île principale de

Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une Britannique âgée de 50 ans,

Angela Glover, emportée par le tsunami après avoir essayé de sauver les chiens

de son refuge, selon sa famille.")

import (

"net/http"

"github.com/nlpcloud/nlpcloud-go"

)

func main() {

client := nlpcloud.NewClient(&http.Client{}, nlpcloud.ClientParams{Model:"<model_name>", Token:"<token>",

GPU:true, Lang:"fra_Latn", Async:false})

// Returns a Summarization struct.

summary, err := client.Summarization(nlpcloud.SummarizationParams{

Text: `Sur des images aériennes, prises la veille par un vol de surveillance

de la Nouvelle-Zélande, la côte d’une île est bordée d’arbres passés du vert

au gris sous l’effet des retombées volcaniques. On y voit aussi des immeubles

endommagés côtoyer des bâtiments intacts. « D’après le peu d’informations

dont nous disposons, l’échelle de la dévastation pourrait être immense,

spécialement pour les îles les plus isolées », avait déclaré plus tôt

Katie Greenwood, de la Fédération internationale des sociétés de la Croix-Rouge.

Selon l’Organisation mondiale de la santé (OMS), une centaine de maisons ont

été endommagées, dont cinquante ont été détruites sur l’île principale de

Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une Britannique âgée de 50 ans,

Angela Glover, emportée par le tsunami après avoir essayé de sauver les chiens

de son refuge, selon sa famille.`,

})

...

}

const NLPCloudClient = require('nlpcloud');

const client = new NLPCloudClient({model:'<model_name>', token:'<token>', gpu:true, lang:'fra_Latn'})

// Returns an Axios promise with the results.

// In case of success, results are contained in `response.data`.

// In case of failure, you can retrieve the status code in `err.response.status`

// and the error message in `err.response.data.detail`.

client.summarization({text:`Sur des images aériennes, prises la veille par un vol de surveillance

de la Nouvelle-Zélande, la côte d’une île est bordée d’arbres passés du vert

au gris sous l’effet des retombées volcaniques. On y voit aussi des immeubles

endommagés côtoyer des bâtiments intacts. « D’après le peu d’informations

dont nous disposons, l’échelle de la dévastation pourrait être immense,

spécialement pour les îles les plus isolées », avait déclaré plus tôt

Katie Greenwood, de la Fédération internationale des sociétés de la Croix-Rouge.

Selon l’Organisation mondiale de la santé (OMS), une centaine de maisons ont

été endommagées, dont cinquante ont été détruites sur l’île principale de

Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une Britannique âgée de 50 ans,

Angela Glover, emportée par le tsunami après avoir essayé de sauver les chiens

de son refuge, selon sa famille.`}).then(function (response) {

console.log(response.data);

})

.catch(function (err) {

console.error(err.response.status);

console.error(err.response.data.detail);

});

require 'vendor/autoload.php';

use NLPCloud\NLPCloud;

$client = new \NLPCloud\NLPCloud('<model_name>','<token>', True, 'fr');

# Returns a json object.

echo json_encode($client->summarization("Sur des images aériennes, prises la veille par un vol de surveillance

de la Nouvelle-Zélande, la côte d’une île est bordée d’arbres passés du vert

au gris sous l’effet des retombées volcaniques. On y voit aussi des immeubles

endommagés côtoyer des bâtiments intacts. « D’après le peu d’informations

dont nous disposons, l’échelle de la dévastation pourrait être immense,

spécialement pour les îles les plus isolées », avait déclaré plus tôt

Katie Greenwood, de la Fédération internationale des sociétés de la Croix-Rouge.

Selon l’Organisation mondiale de la santé (OMS), une centaine de maisons ont

été endommagées, dont cinquante ont été détruites sur l’île principale de

Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une Britannique âgée de 50 ans,

Angela Glover, emportée par le tsunami après avoir essayé de sauver les chiens

de son refuge, selon sa famille."));

Output:

{"summary_text": "Selon l'organisation mondiale de la santé, une centaine

de maisons ont été endommagées, dont 50 détruites sur l'île principale

de Tonga, Tongatapu. La police locale, citée par les autorités néo-zélandaises,

a également fait état de deux morts, dont une femme britannique de 50 ans,

Angela Glover. Glover a été emportée par le tsunami après avoir tenté

de sauver des chiens de son refuge."}

AI models do not always work well with non-English languages.

We do our best to add non-English models when it's possible. See for example Fine-tuned LLaMA 3 70B, XLM Roberta Large XNLI, Paraphrase Multilingual MPNet Base V2, or spaCy. Unfortunately not all the models are good at handling non-English languages.

In order to solve this challenge, we developed a multilingual module that automatically translates your input into English, performs the actual natural language processing task, and then translates the result back to your original language. It makes your requests a bit slower but returns good results in many languages.

Even for models that natively understand non-English languages, they actually sometimes work better with the multilingual addon.

This multilingual add-on is free of charge and automatically used when you add a language code in your URL.

Simply add your language code in the endpoint URL, after the API version and before the name of the model: https://api.nlpcloud.io/v1/{language code}/{model}

If you are using a GPU, add your language code after the GPU, and before the name of the model: https://api.nlpcloud.io/v1/gpu/{language code}/{model}

For example, here is the endpoint you should use for summarization of French text with Bart Large CNN: https://api.nlpcloud.io/v1/fra_Latn/bart-large-cnn/summarization. And here is the endpoint you should use for summarization of French text with Bart Large CNN on GPU: https://api.nlpcloud.io/v1/fra_Latn/bart-large-cnn/summarization.

Here is the full list of supported language codes:

| Language | Code |

|---|---|

| Acehnese (Arabic script) | ace_Arab |

| Acehnese (Latin script) | ace_Latn |

| Mesopotamian Arabic | acm_Arab |

| Ta’izzi-Adeni Arabic | acq_Arab |

| Tunisian Arabic | aeb_Arab |

| Afrikaans | afr_Latn |

| South Levantine Arabic | ajp_Arab |

| Akan | aka_Latn |

| Amharic | amh_Ethi |

| North Levantine Arabic | apc_Arab |

| Modern Standard Arabic | arb_Arab |

| Modern Standard Arabic (Romanized) | arb_Latn |

| Najdi Arabic | ars_Arab |

| Moroccan Arabic | ary_Arab |

| Egyptian Arabic | arz_Arab |

| Assamese | asm_Beng |

| Asturian | ast_Latn |

| Awadhi | awa_Deva |

| Central Aymara | ayr_Latn |

| South Azerbaijani | azb_Arab |

| North Azerbaijani | azj_Latn |

| Bashkir | bak_Cyrl |

| Bambara | bam_Latn |

| Balinese | ban_Latn |

| Belarusian | bel_Cyrl |

| Bemba | bem_Latn |

| Bengali | ben_Beng |

| Bhojpuri | bho_Deva |

| Banjar (Arabic script) | bjn_Arab |

| Banjar (Latin script) | bjn_Latn |

| Standard Tibetan | bod_Tibt |

| Bosnian | bos_Latn |

| Buginese | bug_Latn |

| Bulgarian | bul_Cyrl |

| Catalan | cat_Latn |

| Cebuano | ceb_Latn |

| Czech | ces_Latn |

| Chokwe | cjk_Latn |

| Central Kurdish | ckb_Arab |

| Crimean Tatar | crh_Latn |

| Welsh | cym_Latn |

| Danish | dan_Latn |

| German | deu_Latn |

| Southwestern Dinka | dik_Latn |

| Dyula | dyu_Latn |

| Dzongkha | dzo_Tibt |

| Greek | ell_Grek |

| Esperanto | epo_Latn |

| Estonian | est_Latn |

| Basque | eus_Latn |

| Ewe | ewe_Latn |

| Faroese | fao_Latn |

| Fijian | fij_Latn |

| Finnish | fin_Latn |

| Fon | fon_Latn |

| French | fra_Latn |

| Friulian | fur_Latn |

| Nigerian Fulfulde | fuv_Latn |

| Scottish Gaelic | gla_Latn |

| Irish | gle_Latn |

| Galician | glg_Latn |

| Guarani | grn_Latn |

| Gujarati | guj_Gujr |

| Haitian Creole | hat_Latn |

| Hausa | hau_Latn |

| Hebrew | heb_Hebr |

| Hindi | hin_Deva |

| Chhattisgarhi | hne_Deva |

| Croatian | hrv_Latn |

| Hungarian | hun_Latn |

| Armenian | hye_Armn |

| Igbo | ibo_Latn |

| Ilocano | ilo_Latn |

| Indonesian | ind_Latn |

| Icelandic | isl_Latn |

| Italian | ita_Latn |

| Javanese | jav_Latn |

| Japanese | jpn_Jpan |

| Kabyle | kab_Latn |

| Jingpho | kac_Latn |

| Kamba | kam_Latn |

| Kannada | kan_Knda |

| Kashmiri (Arabic script) | kas_Arab |

| Kashmiri (Devanagari script) | kas_Deva |

| Georgian | kat_Geor |

| Central Kanuri (Arabic script) | knc_Arab |

| Central Kanuri (Latin script) | knc_Latn |

| Kazakh | kaz_Cyrl |

| Kabiyè | kbp_Latn |

| Kabuverdianu | kea_Latn |

| Khmer | khm_Khmr |

| Kikuyu | kik_Latn |

| Kinyarwanda | kin_Latn |

| Kyrgyz | kir_Cyrl |

| Kimbundu | kmb_Latn |

| Northern Kurdish | kmr_Latn |

| Kikongo | kon_Latn |

| Korean | kor_Hang |

| Lao | lao_Laoo |

| Ligurian | lij_Latn |

| Limburgish | lim_Latn |

| Lingala | lin_Latn |

| Lithuanian | lit_Latn |

| Lombard | lmo_Latn |

| Latgalian | ltg_Latn |

| Luxembourgish | ltz_Latn |

| Luba-Kasai | lua_Latn |

| Ganda | lug_Latn |

| Luo | luo_Latn |

| Mizo | lus_Latn |

| Standard Latvian | lvs_Latn |

| Magahi | mag_Deva |

| Maithili | mai_Deva |

| Malayalam | mal_Mlym |

| Marathi | mar_Deva |

| Minangkabau (Arabic script) | min_Arab |

| Minangkabau (Latin script) | min_Latn |

| Macedonian | mkd_Cyrl |

| Plateau Malagasy | plt_Latn |

| Maltese | mlt_Latn |

| Meitei (Bengali script) | mni_Beng |

| Halh Mongolian | khk_Cyrl |

| Mossi | mos_Latn |

| Maori | mri_Latn |

| Burmese | mya_Mymr |

| Dutch | nld_Latn |

| Norwegian Nynorsk | nno_Latn |

| Norwegian Bokmål | nob_Latn |

| Nepali | npi_Deva |

| Northern Sotho | nso_Latn |

| Nuer | nus_Latn |

| Nyanja | nya_Latn |

| Occitan | oci_Latn |

| West Central Oromo | gaz_Latn |

| Odia | ory_Orya |

| Pangasinan | pag_Latn |

| Eastern Panjabi | pan_Guru |

| Papiamento | pap_Latn |

| Western Persian | pes_Arab |

| Polish | pol_Latn |

| Portuguese | por_Latn |

| Dari | prs_Arab |

| Southern Pashto | pbt_Arab |

| Ayacucho Quechua | quy_Latn |

| Romanian | ron_Latn |

| Rundi | run_Latn |

| Russian | rus_Cyrl |

| Sango | sag_Latn |

| Sanskrit | san_Deva |

| Santali | sat_Olck |

| Sicilian | scn_Latn |

| Shan | shn_Mymr |

| Sinhala | sin_Sinh |

| Slovak | slk_Latn |

| Slovenian | slv_Latn |

| Samoan | smo_Latn |

| Shona | sna_Latn |

| Sindhi | snd_Arab |

| Somali | som_Latn |

| Southern Sotho | sot_Latn |

| Spanish | spa_Latn |

| Tosk Albanian | als_Latn |

| Sardinian | srd_Latn |

| Serbian | srp_Cyrl |

| Swati | ssw_Latn |

| Sundanese | sun_Latn |

| Swedish | swe_Latn |

| Swahili | swh_Latn |

| Silesian | szl_Latn |

| Tamil | tam_Taml |

| Tatar | tat_Cyrl |

| Telugu | tel_Telu |

| Tajik | tgk_Cyrl |

| Tagalog | tgl_Latn |

| Thai | tha_Thai |

| Tigrinya | tir_Ethi |

| Tamasheq (Latin script) | taq_Latn |

| Tamasheq (Tifinagh script) | taq_Tfng |

| Tok Pisin | tpi_Latn |

| Tswana | tsn_Latn |

| Tsonga | tso_Latn |

| Turkmen | tuk_Latn |

| Tumbuka | tum_Latn |

| Turkish | tur_Latn |

| Twi | twi_Latn |

| Central Atlas Tamazight | tzm_Tfng |

| Uyghur | uig_Arab |

| Ukrainian | ukr_Cyrl |

| Umbundu | umb_Latn |

| Urdu | urd_Arab |

| Northern Uzbek | uzn_Latn |

| Venetian | vec_Latn |

| Vietnamese | vie_Latn |

| Waray | war_Latn |

| Wolof | wol_Latn |

| Xhosa | xho_Latn |

| Eastern Yiddish | ydd_Hebr |

| Yoruba | yor_Latn |

| Yue Chinese | yue_Hant |

| Chinese (Simplified) | zho_Hans |

| Chinese (Traditional) | zho_Hant |

| Standard Malay | zsm_Latn |

| Zulu | zul_Latn |

The multilingual add-on can be used with the following endpoints:

- chatbot (chatbot and conversational AI)

- classification (text classification)

- generation (text generation)

- gs-correction (grammar and spelling correction)

- intent-classification (intent classification)

- image-generation (image generation)

- kw-kp-extraction (keywords and keyphrases extraction)

- paraphrasing (paraphrasing / rewriting)

- question (question answering)

- semantic-search (semantic search)

- sentiment-analysis (sentiment analysis / emotion analysis)

- summarization (text summarization)

Asynchronous Mode

Input (1st request):

curl "https://api.nlpcloud.io/v1/gpu/async/whisper/asr" \

-H "Authorization: Token <token>" \

-H "Content-Type: application/json" \

-X POST -d '{"url":"https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3"}'

import nlpcloud

client = nlpcloud.Client("whisper", "<token>", gpu=True, asynchronous=True)

client.asr("https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3")

require 'nlpcloud'

client = NLPCloud::Client.new('whisper','<token>', gpu: true, asynchronous: true)

client.asr(url:"https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3")

import (

"net/http"

"github.com/nlpcloud/nlpcloud-go"

)

func main() {

client := nlpcloud.NewClient(&http.Client{}, nlpcloud.ClientParams{Model:"<model_name>", Token:"<token>",

GPU:true, Lang:"", Async:true})

asr, err := client.asr(nlpcloud.ASRParams{

URL: "https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3",

})

...

}

const NLPCloudClient = require('nlpcloud');

const client = new NLPCloudClient({model:'whisper',token:'<token>',gpu:true,async:true})

// Returns an Axios promise with the results.

// In case of success, results are contained in `response.data`.

// In case of failure, you can retrieve the status code in `err.response.status`

// and the error message in `err.response.data.detail`.

client.asr({url:'https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3'}).then(function (response) {

console.log(response.data);

})

.catch(function (err) {

console.error(err.response.status);

console.error(err.response.data.detail);

});

require 'vendor/autoload.php';

use NLPCloud\NLPCloud;

$client = new \NLPCloud\NLPCloud('whisper','<token>',True,'',True);

echo json_encode($client->asr("https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3"));

Output (returns a 202 HTTP code):

{"url":"https://api.nlpcloud.io/v1/get-async-result/86cfbf00-f442-40b1-bb89-275d7d32fd48"}

Some AI models can take a long time to return. It is impractical to use these models through an API in a synchronous way. The solution is to ask for an asynchronous response. In that case your request will be processed in the background, and you will have to poll the result on a regular basis until it is ready.

When used in asynchronous mode, our AI models accept much larger inputs.

In order to use this mode, you should add /async/ to the url after the GPU (if you use a GPU) and before the language (if you use the multilingual addon). Here are some examples:

- If you use a GPU but not the multilingual addon:

https://api.nlpcloud.io/v1/gpu/async/{model} - If you use a GPU and the French language with the multilingual addon:

https://api.nlpcloud.io/v1/gpu/async/fra_Latn/{model} - If you don't use a GPU and don'use the multilingual addon:

https://api.nlpcloud.io/v1/async/{model} - If you don't use a GPU and use the French language with the multilingual addon:

https://api.nlpcloud.io/v1/async/fr_Latn/{model}

It instantly returns a URL (with HTTP code 202) containing a unique ID that you should use to retrieve your result once it is ready. You should poll this URL on a regular basis until the result is ready. If the result is not ready, an HTTP code 202 will be returned. If it is ready, an HTTP code 200 will be returned. It is good practice to poll the result URL every 10 seconds.

Input (2nd request):

curl "https://api.nlpcloud.io/v1/get-async-result/<your unique ID>" \

-H "Authorization: Token <token>"

# Returns a json object.

client.async_result("https://api.nlpcloud.io/v1/get-async-result/<your unique ID>")

client.async_result("https://api.nlpcloud.io/v1/get-async-result/<your unique ID>")

asr, err := client.asr(nlpcloud.AsyncResultParams{

URL: "https://api.nlpcloud.io/v1/get-async-result/<your unique ID>",

})

client.asyncResult('https://api.nlpcloud.io/v1/get-async-result/<your unique ID>').then(function (response) {

console.log(response.data);

})

.catch(function (err) {

console.error(err.response.status);

console.error(err.response.data.detail);

});

echo json_encode($client->asyncResult("https://api.nlpcloud.io/v1/get-async-result/<your unique ID>"));

Output (returns a 202 HTTP code with an empty body if not ready, or a 200 HTTP code with the result if ready):

{

"created_on":"2022-11-18T15:56:16.536025Z",

"request_body":"{\"url\":\"https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3\"}",

"finished_on":"2022-11-18T15:56:29.393898Z",

"http_code":200,

"error_detail":"",

"content":"{\"text\":\" CHILDREN AT PLAY by William Henry Davies Read for LibriVox.org by Anita Hibbard, September 27, 2022 I hear a merry noise indeed. Is it the geese and ducks that take their first plunge in a quiet pond, that into scores of ripples break? Or children make this merry sound. I see an oak tree, its strong back could not be bent an inch, though all its leaves were stone, or iron even. A boy, with many a lusty call, rides on a bough bareback through heaven. I see two children dig a hole, and plant in it a cherry stone. We'll come tomorrow, one child said, and then the tree will be full grown and all its boughs have cherries red. Ah, children, what a life to lead! You love the flowers, but when they're past, no flowers are missed by your bright eyes, and when cold winter comes at last, snowflakes shall be your butterflies.\",\"duration\":82,\"language\":\"en\",\"segments\":[{\"id\":0,\"start\":0.0,\"end\":8.94,\"text\":\" CHILDREN AT PLAY by William Henry Davies Read for LibriVox.org by Anita Hibbard, September\"},{\"id\":1,\"start\":8.94,\"end\":11.76,\"text\":\" 27, 2022\"},{\"id\":2,\"start\":11.76,\"end\":14.8,\"text\":\" I hear a merry noise indeed.\"},{\"id\":3,\"start\":14.8,\"end\":21.04,\"text\":\" Is it the geese and ducks that take their first plunge in a quiet pond, that into scores\"},{\"id\":4,\"start\":21.04,\"end\":22.96,\"text\":\" of ripples break?\"},{\"id\":5,\"start\":22.96,\"end\":26.04,\"text\":\" Or children make this merry sound.\"},{\"id\":6,\"start\":26.04,\"end\":32.64,\"text\":\" I see an oak tree, its strong back could not be bent an inch, though all its leaves were\"},{\"id\":7,\"start\":32.64,\"end\":35.04,\"text\":\" stone, or iron even.\"},{\"id\":8,\"start\":35.04,\"end\":41.84,\"text\":\" A boy, with many a lusty call, rides on a bough bareback through heaven.\"},{\"id\":9,\"start\":41.84,\"end\":46.8,\"text\":\" I see two children dig a hole, and plant in it a cherry stone.\"},{\"id\":10,\"start\":46.8,\"end\":53.68,\"text\":\" We'll come tomorrow, one child said, and then the tree will be full grown and all its boughs\"},{\"id\":11,\"start\":53.68,\"end\":56.0,\"text\":\" have cherries red.\"},{\"id\":12,\"seek\":5600,\"start\":56.0,\"end\":59.76,\"text\":\" Ah, children, what a life to lead!\"},{\"id\":13,\"seek\":5600,\"start\":59.76,\"end\":66.24,\"text\":\" You love the flowers, but when they're past, no flowers are missed by your bright eyes,\"},{\"id\":14,\"seek\":6624,\"start\":66.24,\"end\":91.56,\"text\":\" and when cold winter comes at last, snowflakes shall be your butterflies.\"}]}"

}

The asynchronous mode can be used with the following endpoints:

- asr (automatic speech recognition): the maximum input audio/video length is 60,000 seconds in asynchronous mode, while it is 200 seconds in synchronous mode

- entities (entity extraction): the maximum input text is 1 million tokens in asynchronous mode, while in synchronous mode it is 256 tokens in synchronous mode. Under the hood, your input text is automatically split into several smaller requests, and then the result is reassembled after the fact.

- gs-correction (grammar and spelling correction): the maximum input text is 1 million tokens in asynchronous mode, while in synchronous mode it is 256 tokens in synchronous mode. Under the hood, your input text is automatically split into several smaller requests, and then the result is reassembled after the fact.

- kw-kp-extraction (keywords and keyphrases extraction): the maximum input text is 1 million tokens in asynchronous mode, while in synchronous mode it is 1024 tokens in synchronous mode. Under the hood, your input text is automatically split into several smaller requests, and then the result is reassembled after the fact.

- paraphrasing (paraphrasing and rewriting): the maximum input text is 1 million tokens in asynchronous mode, while it is 256 tokens in synchronous mode. Under the hood, your input text is automatically split into several smaller requests, and then the result is reassembled after the fact.

- summarization (text summarization): the maximum input text is 1 million tokens in asynchronous mode, while in synchronous mode it is 1024 tokens for Bart Large and GPT, and 8192 tokens for T5 Base Headline Generation. Under the hood, your input text is automatically split into several smaller requests, and then the result is reassembled after the fact.

- translation (translation): the maximum input text is 1 million tokens in asynchronous mode, while it is 256 tokens in synchronous mode. Under the hood, your input text is automatically split into several smaller requests, and then the result is reassembled after the fact.

First HTTP Request

POST https://api.nlpcloud.io/v1/<gpu if any>/<language if any>/async/<model>/<endpoint>

POST Values

Should contain the same values as the underlying endpoint you are trying to use.

Output

This endpoint returns a JSON object containing the following elements:

| Key | Type | Description |

|---|---|---|

url |

string | The url that you should poll to get the final result |

Second HTTP Request

GET https://api.nlpcloud.io/v1/get-async-result/<your unique ID>

Output

This endpoint returns a JSON object containing the following elements:

| Key | Type | Description |

|---|---|---|

created_on |

datetime | The date and time of your initial request |

request_body |

string | The content of your initial request |

finished_on |

datetime | The date and time when your request was completed |

http_code |

int | The HTTP code returned by the AI model |

error_detail |

string | The error returned by the AI model if any |

content |

string | The response returned by the AI model |

Endpoints

Automatic Speech Recognition

Input:

curl "https://api.nlpcloud.io/v1/gpu/whisper/asr" \

-H "Authorization: Token <token>" \

-H "Content-Type: application/json" \

-X POST -d '{"url":"https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3"}'

import nlpcloud

client = nlpcloud.Client("whisper", "<token>", True)

# Returns a json object.

client.asr("https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3")

require 'nlpcloud'

client = NLPCloud::Client.new('whisper','<token>', gpu: true)

# Returns a json object.

client.asr(url:"https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3")

import (

"net/http"

"github.com/nlpcloud/nlpcloud-go"

)

func main() {

client := nlpcloud.NewClient(&http.Client{}, nlpcloud.ClientParams{Model:"<model_name>", Token:"<token>",

GPU:true, Lang:"", Async:false})

// Returns an ASR struct.

asr, err := client.asr(nlpcloud.ASRParams{

URL: "https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3",

})

...

}

const NLPCloudClient = require('nlpcloud');

const client = new NLPCloudClient({model:'whisper',token:'<token>',gpu:true})

// Returns an Axios promise with the results.

// In case of success, results are contained in `response.data`.

// In case of failure, you can retrieve the status code in `err.response.status`

// and the error message in `err.response.data.detail`.

client.asr({url:'https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3'}).then(function (response) {

console.log(response.data);

})

.catch(function (err) {

console.error(err.response.status);

console.error(err.response.data.detail);

});

require 'vendor/autoload.php';

use NLPCloud\NLPCloud;

$client = new \NLPCloud\NLPCloud('whisper','<token>',True);

# Returns a json object.

echo json_encode($client->asr("https://ia801405.us.archive.org/17/items/children_at_play_2210.poem_librivox/childrenatplay_davies_ah_64kb.mp3"));

Output:

{

"text": " CHILDREN AT PLAY by William Henry Davies Read for LibriVox.org by Anita Hibbard, September 27, 2022 I hear a merry noise indeed. Is it the geese and ducks that take their first plunge in a quiet pond, that into scores of ripples break? Or children make this merry sound. I see an oak tree, its strong back could not be bent an inch, though all its leaves were stone, or iron even. A boy, with many a lusty call, rides on a bough bareback through heaven. I see two children dig a hole, and plant in it a cherry stone. We'll come tomorrow, one child said, and then the tree will be full grown and all its boughs have cherries red. Ah, children, what a life to lead! You love the flowers, but when they're past, no flowers are missed by your bright eyes, and when cold winter comes at last, snowflakes shall be your butterflies.",

"duration": 82,

"language": "en",

"segments": [

{

"id": 0,

"start": 0,

"end": 8.94,

"text": " CHILDREN AT PLAY by William Henry Davies Read for LibriVox.org by Anita Hibbard, September"

},

{

"id": 1,

"start": 8.94,

"end": 11.76,

"text": " 27, 2022"

},

[...]

{

"id": 13,

"start": 59.76,

"end": 66.24,

"text": " You love the flowers, but when they're past, no flowers are missed by your bright eyes,"

},

{

"id": 14,

"start": 66.24,

"end": 82,

"text": " and when cold winter comes at last, snowflakes shall be your butterflies."

}

],

"words": [

{

"id": 0,

"text": " Children",

"start": 0.75,

"end": 1.37

},

{

"id": 1,

"text": " at",

"start": 1.37,

"end": 1.65

},

[...]

{

"id": 165,

"text": " public",

"start": 75.77,

"end": 76.03

},

{

"id": 166,

"text": " domain.",

"start": 76.03,

"end": 76.43